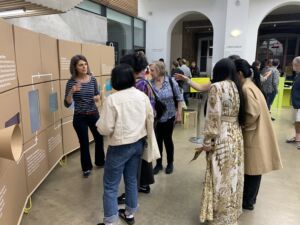

As part of the London Design Festival, VA-PEPR participated in the Digital Design Weekend 23

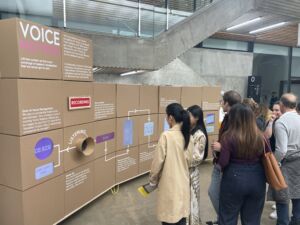

The VA-PEPR project’s interactive installation was invited to be displayed during the Digital Design Weekend 23 at the Victoria and Albert Museum in London, as part of the London Design Festival 🎉 👏

The team showcased “Voice Matters”, a thought-provoking installation exploring the fascinating intersection of AI and human interaction. The installation delved into the power of AI to listen to, understand, and interpret our conversations 🤖 Participants of all ages experienced firsthand how much of our daily conversations can be comprehended and summarized by the smart devices that surround us.

Big congrats to Michael Shorter, Jon Rogers, Melanie Rickenmann, Aurelio Todisco, Aysun Aytaç, Sabine Junginger and Edith Maier!

The installation will soon be displayed at the School of Art and Design, Lucerne University of Applied Sciences and Arts (HSLU D&K). Stay tuned!

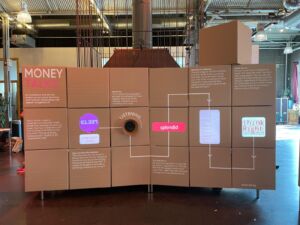

How does VOICE MATTERS work?

The Microphones

The microphone array in Voice Matters is connected to a series of Raspberry Pis and runs Microsoft’s cloud-based AI, Azure. Together, they are constantly listening, understanding and translating everything that is heard. This data is added to an ever-growing text document, which is then analysed in various ways. The results are displayed across the 7 screens of Voice Matters.

Screen 1: Cost of Voice Recognition

This screen displays the cost of running Voice Matters, which includes Microsoft’s real-time speech-to-text service and OpenAI’s ChatGPT service. We pay to constantly listen to our environment. We wanted viewers to consider whether they would be happy to pay this. Or should the donation of their data to technology companies be enough?
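To make the idea of a running cost concrete, here is a minimal sketch of how such a figure could be tallied. The rates, names and formula are assumptions for illustration only; they are not the installation's actual code or the real prices of either service.

```python
# Hypothetical rates, chosen only to illustrate the arithmetic.
SPEECH_RATE_PER_HOUR = 1.00       # assumed price per hour of audio transcribed
LLM_RATE_PER_1K_TOKENS = 0.002    # assumed price per 1,000 language-model tokens


def running_cost(audio_seconds: float, llm_tokens: int) -> float:
    """Return the estimated total cost for the audio and tokens used so far."""
    speech_cost = audio_seconds / 3600 * SPEECH_RATE_PER_HOUR
    llm_cost = llm_tokens / 1000 * LLM_RATE_PER_1K_TOKENS
    return round(speech_cost + llm_cost, 4)


if __name__ == "__main__":
    # One hour of listening plus 5,000 tokens of analysis.
    print(running_cost(audio_seconds=3600, llm_tokens=5000))
```

A display like Screen 1 could simply call a function of this kind each time new audio or text is processed.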

Screen 2: The Last Word

This screen displays the last word Microsoft’s speech-to-text service thinks it heard. This allows viewers to see how accents are understood, and also how sensitive the hardware is.

Screen 3: Machine Confidence

The technology is not 100% confident in its own ability to hear accurately. This screen lifts the lid on this black-box technology and allows viewers to see how confident the AI was in each of the words that it heard. More interesting still are the words that it did not hear: the words where machine learning is used to fill in the blanks.
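As a rough illustration of the confidence idea, the sketch below assumes each recognised word arrives with a score between 0 and 1 and picks out the words the machine was least sure about. The data shape, the threshold and the function name are hypothetical; the real service's output format may differ.

```python
from typing import List, Tuple

# Hypothetical shape: (word, confidence between 0.0 and 1.0).
ScoredWord = Tuple[str, float]


def low_confidence_words(words: List[ScoredWord], threshold: float = 0.5) -> List[str]:
    """Return the words whose confidence falls below the assumed threshold."""
    return [word for word, confidence in words if confidence < threshold]


if __name__ == "__main__":
    heard = [("voice", 0.93), ("matters", 0.88), ("lucerne", 0.41)]
    print(low_confidence_words(heard))
```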

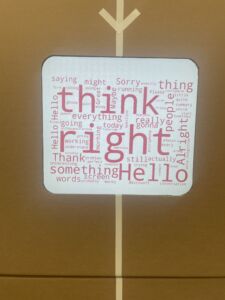

Screen 4: Word Cloud

This screen displays a word cloud of what the AI has heard. The larger the word, the more often it was heard.
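The counting behind a word cloud can be sketched in a few lines. This is an assumed approach, not the installation's own code: it counts how often each word appears in the transcript and scales a font size linearly with that count. The size range and the simple tokenisation are illustrative choices.

```python
import re
from collections import Counter
from typing import Dict


def word_frequencies(transcript: str) -> Counter:
    """Count occurrences of each lowercase word in the transcript."""
    words = re.findall(r"[a-z']+", transcript.lower())
    return Counter(words)


def font_sizes(freqs: Counter, min_size: int = 12, max_size: int = 72) -> Dict[str, float]:
    """Map each word to a font size proportional to its share of the top count."""
    if not freqs:
        return {}
    top = max(freqs.values())
    return {
        word: min_size + (max_size - min_size) * count / top
        for word, count in freqs.items()
    }


if __name__ == "__main__":
    freqs = word_frequencies("Voice matters. Voice, voice!")
    print(font_sizes(freqs))
```

A real word cloud would usually also drop very common words such as “the” and “and” before sizing.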

Screen 5: Themes

This is where we use the power of ChatGPT to analyse the voice data and try to create a meaningful summary. This screen displays the output from prompting ChatGPT to list the top five themes in order of importance.
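The exact prompt used in Voice Matters is not given here, so the following is only a guess at what such a prompt could look like. The wording and the function name are hypothetical, and the sketch only builds the text; it does not call any service.

```python
def build_themes_prompt(transcript: str, n_themes: int = 5) -> str:
    """Build an illustrative prompt asking for the top themes of a transcript."""
    return (
        f"List the top {n_themes} themes in the following transcript, "
        "in order of importance, one per line.\n\n"
        f"Transcript:\n{transcript}"
    )


if __name__ == "__main__":
    print(build_themes_prompt("We talked about museums, design and smart speakers."))
```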

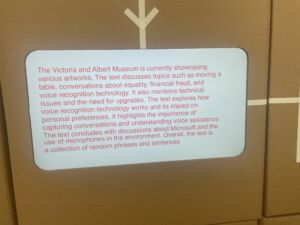

Screen 6: Summary

This screen displays the output from prompting ChatGPT to generate a 75-word summary from the voice data.

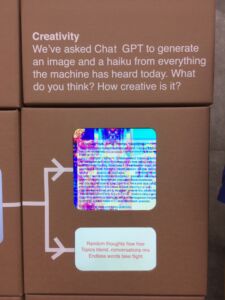

Screen 7: Creativity

How creative is ChatGPT? This screen generates an image and a haiku from everything the machine has heard.