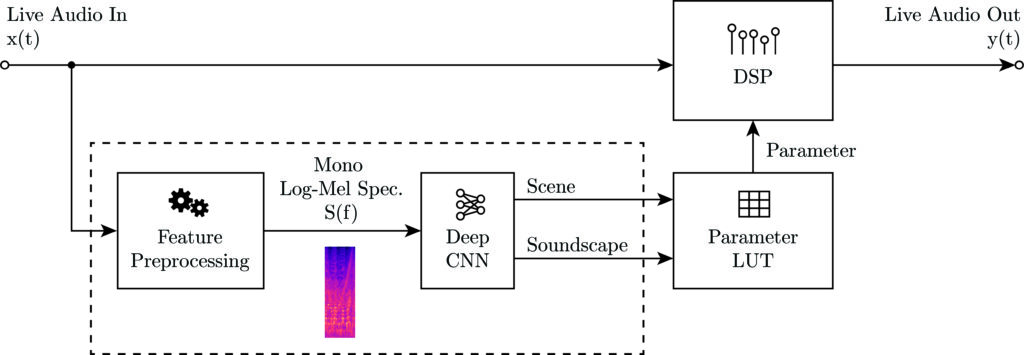

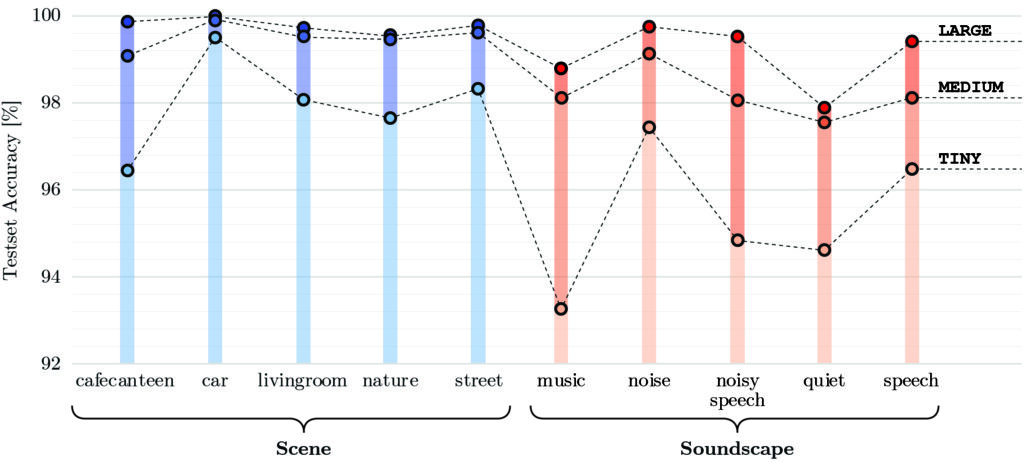

Acoustic signal processing is often accompanied by adaptive filtering in order to achieve optimal audio quality. For hearing aids, the goal is optimal speech intelligibility and perception of the acoustic environment. We introduce a system that continuously recognizes acoustic environments using Artificial Intelligence (AI) in the form of a Deep Convolutional Neural Network (CNN), with a focus on real-time implementation for adjusting hearing aid parameters. For training, a custom dataset was acquired consisting of 23.8 h of high-quality binaural audio data with five classes per label. Using a manual grid search, three models targeting different complexity metrics were optimized for a trade-off between accuracy and throughput. The CNNs were then quantized to 8 bits after training, achieving an overall accuracy of 99.07%. After reducing the number of Multiply-Accumulate (MAC) operations by a factor of 154 and the number of parameters by a factor of 18, the classifier still detected scenes and soundscapes with an acceptable accuracy of 94.82%, which allows real-time inference at the edge on discrete low-cost hardware with a clock speed of 10 MHz at one inference per second.
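To illustrate what quantizing trained weights to 8 bits involves, the following is a minimal sketch of symmetric per-tensor quantization in plain Python. It is a generic textbook scheme and not necessarily the one used in this work; the function names `quantize_int8` and `dequantize` and the example weight values are assumptions made for illustration only.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Map float weights to signed 8-bit integers with one shared scale."""
    max_abs = max(abs(w) for w in weights)
    # Guard against an all-zero tensor, where any scale would work.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the symmetric range.
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from the 8-bit representation."""
    return [v * scale for v in quantized]


# Made-up example values, not weights from the trained models.
weights = [0.42, -1.27, 0.003, 0.9]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# The rounding error per weight is bounded by half a quantization step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

Each weight is stored in one byte instead of four, and the rounding error stays within half of `scale`. Small weights such as `0.003` collapse to zero, which is one way quantization can cost accuracy.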

Supervisor: Jürgen Wassner