+FO Artificial Intelligence – Creative Practices and Critical Perspectives 2024

Contact: MediaDock, Thomas Knüsel

How algorithms learn to see and to create images

Nicolas Malevé

2. + 3. September | 9:15 ~ 12:00 / 13:00 ~ 17:00 | physical

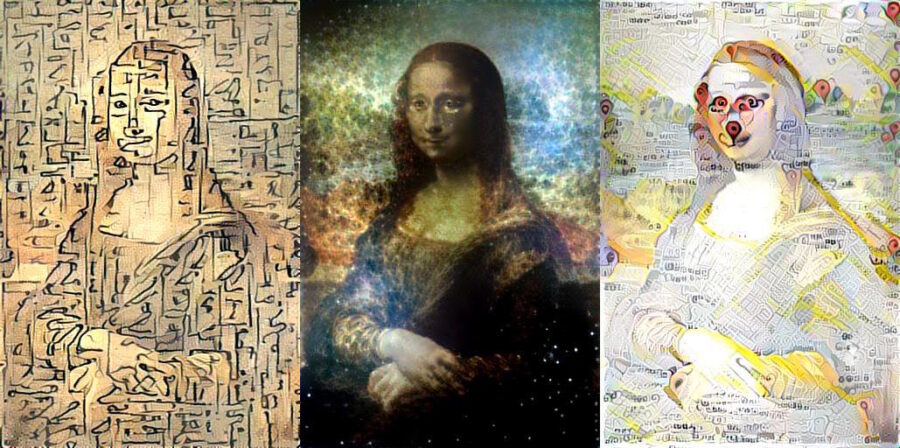

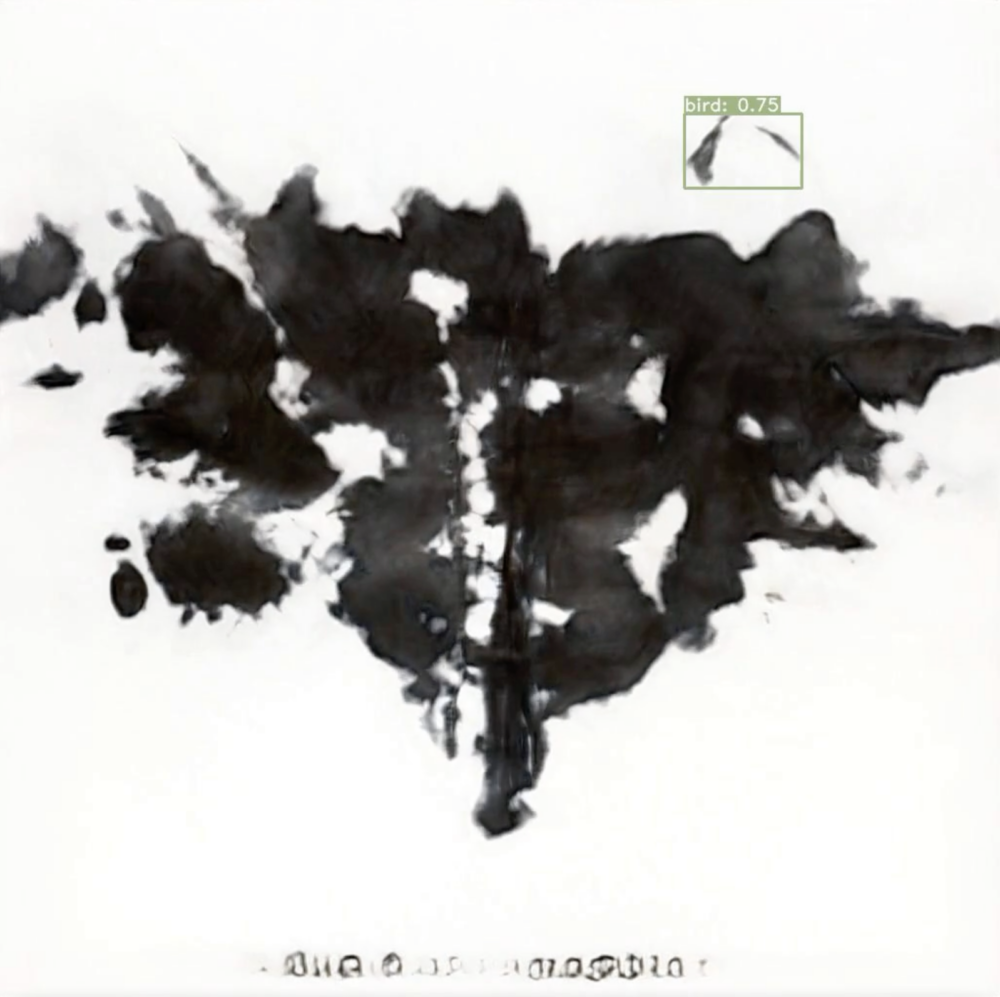

Every day, images are published on the Internet by the millions, and these are only a fraction of all the images that are produced and archived. Computer vision algorithms are designed to make sense of this sheer mass of visual content. They play a central role in managing image traffic on networks, in preparing medical diagnoses, and in analysing never-ending surveillance footage. Algorithms also generate new images (e.g. DALL-E, Midjourney, Stable Diffusion). From deep fakes that can map a person's face onto video of a famous actor or politician to deep dreams in which machine algorithms produce their own hallucinated version of the world, visual techniques powered by artificial intelligence have largely infiltrated film production and even traditional software such as Adobe Photoshop. This evolution has led to spectacular results and, in return, has received a lot of coverage.

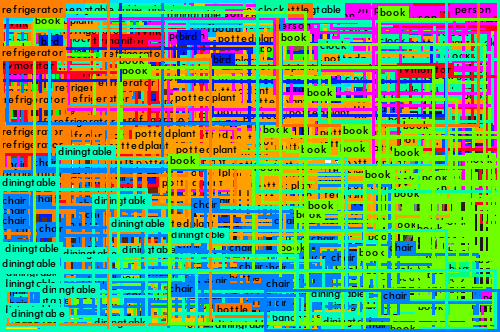

What is less discussed publicly is how machines acquire the ability to interpret and generate images. Machine learning algorithms learn what an image is and what it represents from series of examples selected by humans. To teach machines how to see, computer scientists curate visual datasets. These datasets can be understood as large collections of photographs. State-of-the-art datasets contain billions of items. Recent years have seen a proliferation of datasets. From animals to computer parts, manga to clothing, the visual world is collected and classified at an extraordinary scale.

In this workshop, we will explore these large collections of photographs: how they are assembled, where they come from, and how they can become objects of inquiry or material for creation. We will also explore what it means to look at such an extraordinary number of images, to classify them and to curate them. We will playfully learn the importance of images, and in particular photographs, for teaching computers how to see. We will experience what kind of vision and speed correspond to the model of vision used by algorithms. And we will delve into prompt engineering to push generative AI outside of its comfort zone. This will allow us to ask new questions about the evolution of a technology that constitutes a key component of our visual practices as artists and citizens.

Practical Introduction to AI Tools

Guillaume Massol

4. + 5. September | 9:15 ~ 12:00 / 13:00 ~ 17:00 | physical

In the second workshop we will familiarise ourselves with how machine learning works in practice. After a short introduction, you will have the opportunity to try out different ML models and their different applications with an AI toolkit. Once you are familiar with the tools, you will start to work out your own individual machine learning experiments.

External Links:

https://blog.massol.me/author/guillaume/

https://github.com/gu-ma

Doing Critical Technical Art Practice

Winnie Soon

5. September | 17:00 ~ 18:15 | online

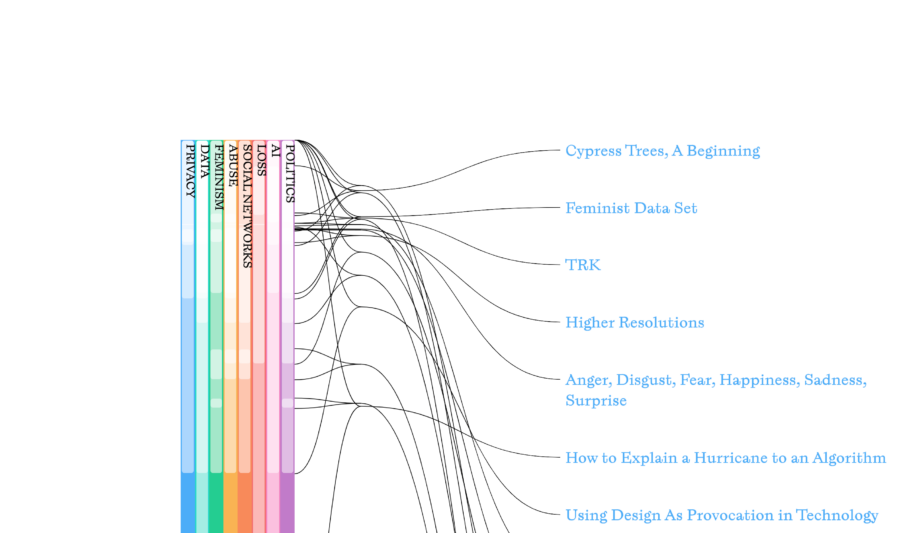

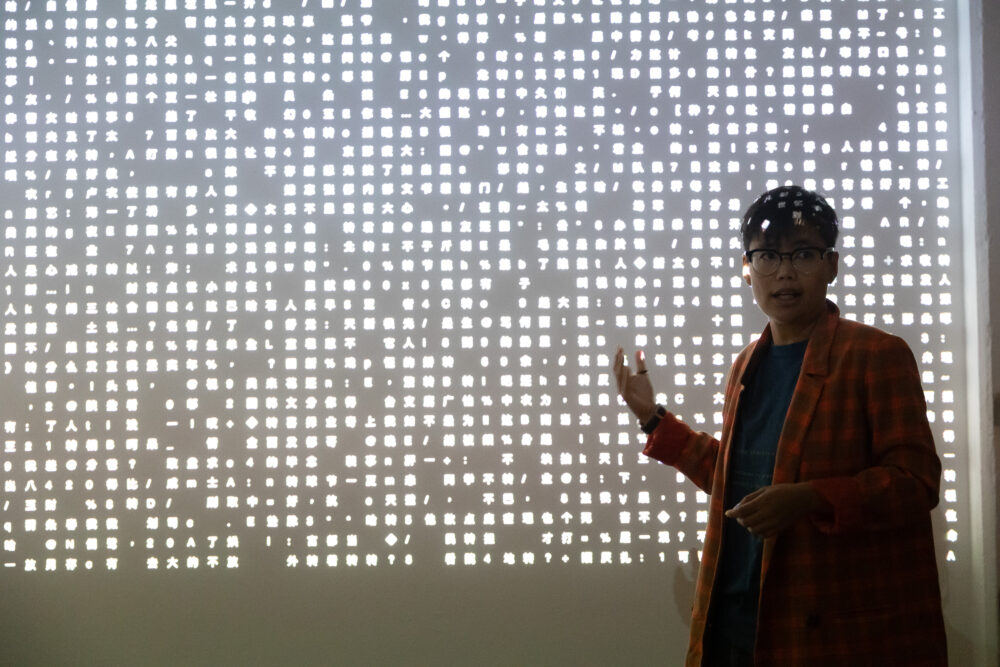

This talk delves into how Machine Learning (ML) and Natural Language Processing (NLP) can be explored as modes of inquiry through the lens of critical technical art practice. Moving beyond their predictive functions, I employ these technologies in my artistic work to raise new questions and possibilities for understanding and reimagining our relationship with artificial intelligence.

By engaging with data(sets), code and algorithms, I investigate the intersection between coding and thinking. Inspired by scholars such as Wendy Hui Kyong Chun and Philip Agre, I emphasise «doing thinking»—a process where technical skills and critical inquiry converge for practice-based research.

More information:

https://sites.hslu.ch/werkstatt/winnie-soon/

Train your own Model

Thomas Knüsel and Alexandra Pfammatter

6. + 9. September | 9:15 ~ 12:00 / 13:00 ~ 17:00 | physical

In this workshop we will show you how to work with generative AI and train your own AI models. We will look at how generative AI works and explore different ways to control the output of generative AI models. In a second step, we will try to build a dataset to train your own image generator to generate your own style, a specific object or a specific character in different variations.

Self-study

10. – 11. September | 9:15 ~ 12:00 / 13:00 ~17:00

12. September | 09:15 ~ 12:00

Time for your own research or experiments. Be curious, and feel free to ask for help if you need advice.

Presentation

12. September | 13:00 ~17:00

A short presentation (max. 15 min per person) of your own research or work from the past days.

Open Studio

13. September | 09:00 ~ 12:00

Mini-exhibition Setup

13. September | 12:00 ~ 14:00

Studio visits to the other + Focus Modules