AI-powered cancer detection: A research project by Morteza Kiani Haftlang

Morteza Kiani Haftlang is on a mission to harness Artificial Intelligence (AI) for early cancer detection. With a background in engineering, AI, and deep learning, he transitioned into healthcare to apply his skills where they matter most. His research at IMAI MedTec explores self-supervised AI models for detecting cancer in 3D light sheet microscopy (LSM) images, aiming to enhance accuracy and reduce manual labelling. Are you curious about how AI can revolutionise cancer detection? In this interview, Morteza shares insights into his work, the challenges of AI in medical imaging, and how his studies at HSLU have shaped his approach.


Morteza Kiani Haftlang, a graduate of the MSc in Applied Information and Data Science at Lucerne University of Applied Sciences and Arts, conducted his thesis at IMAI MedTec on AI-driven medical imaging for cancer detection.

Introduction

First of all, tell us something about yourself: What hashtags best describe you?

#Learner #Multidisciplinarity #Cook #AGIEnthusiast

Tell us more about the hashtags.

Each of the hashtags reflects an essential part of my personality and professional journey:

- #Learner: Learning constantly is essential in an era of emerging technologies. I strive to keep up with mainstream AI trends, continuously learning and exploring new concepts in AI, healthcare and other fields.

- #Multidisciplinarity: My goal is to connect multiple fields, from AI and medical imaging all the way to engineering.

- #Cook: Cooking is my passion. Just like in AI, combining the right ingredients – data, models, and algorithms – leads to the best outcomes.

- #AGIEnthusiast: I am obsessed with the future of artificial general intelligence and its potential to transform industries, particularly healthcare.

About your job: What did you do at IMAI MedTec?

At IMAI MedTec, my thesis focused on a comparative study of various models, particularly self-supervised AI models, to improve cancer detection from 3D histopathological images. In essence, the goal was to identify and label cancerous cells accurately. My work involved researching, training, and fine-tuning deep learning models that help pathologists analyse tissue samples more accurately and efficiently. By reducing the need for manual annotations, we can make cancer screening faster and more precise.

What did you do before?

Before my thesis, I did an internship at Roche, where I was involved in data engineering and data analysis, working with data from production lines and blood sensors. Prior to Roche, I was an electrical engineer. So, you can see how my career has changed. I switched to healthcare AI because I wanted to apply my skills to a field where technology can save lives. The opportunity to work on innovative medical imaging at IMAI MedTec was too exciting to pass up – so that’s how my project started.

The project

Please tell us about your research project.

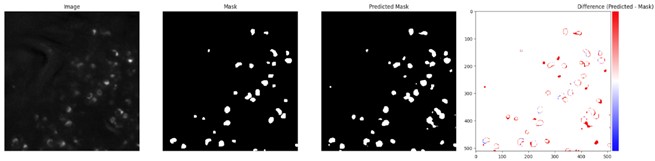

My research focused on self-supervised deep learning models for detecting cancer in 3D light sheet microscopy (LSM) images. Traditional histological analysis relies on limited tissue sampling, so it can overlook cancerous cells and produce false negatives: cancer cells may be missed in up to 20% of cases. By leveraging AI, we aim to analyse entire tissue samples in 3D, thus reducing the risk of missed diagnoses.

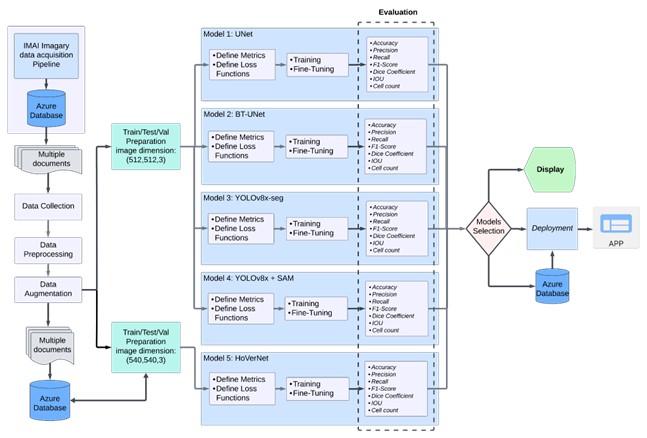

We applied the models considered in this study – U-Net, BTUNet, YOLOv8x-seg, YOLOv8x+SAM, and HoverNet – to a dataset that IMAI provided. The project compared multiple models to find the optimal balance between accuracy and efficiency in segmenting cancerous cells.

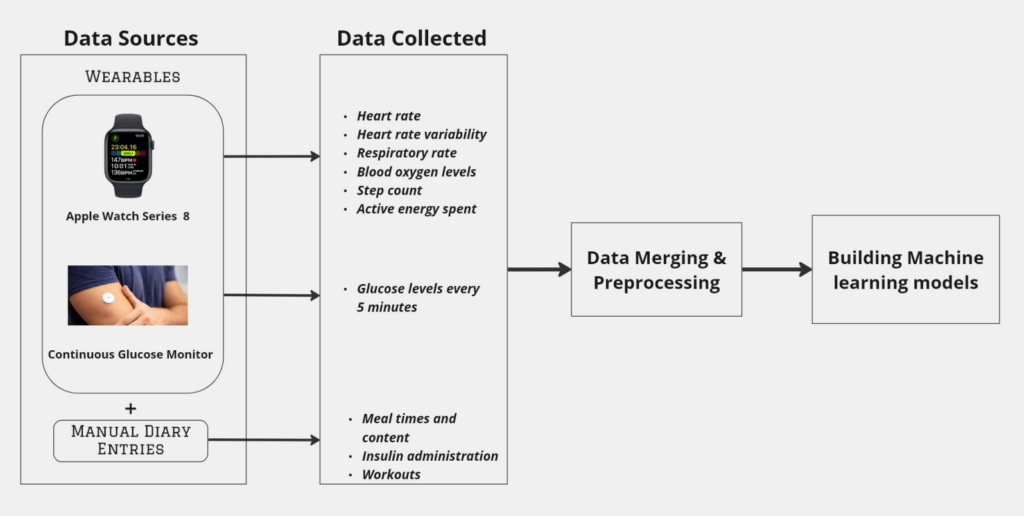

What data and method did you use, and what insights did you gain or do you hope to gain?

We worked with high-resolution 3D LSM images of histopathological tissue samples. Because of their size (sometimes up to 100 GB) and complex content, these images have to be pre-processed before training: normalised, augmented and converted into formats suited to deep learning.
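To make the pre-processing step more concrete, here is a minimal sketch of two common operations on a 3D volume: cutting it into fixed-size patches and rescaling each patch's intensities. The interview does not describe the actual pipeline, so the function names `normalise` and `iter_patches`, the patch size, the percentile limits and the per-patch normalisation are all assumptions chosen for illustration. A small random array stands in for a real LSM volume.

```python
import numpy as np

def normalise(volume, low_pct=1.0, high_pct=99.0):
    """Clip intensities to a percentile range and rescale them to [0, 1].

    The percentile limits are illustrative defaults, not values from the study.
    """
    v = np.asarray(volume, dtype=np.float64)
    lo, hi = np.percentile(v, [low_pct, high_pct])
    if hi <= lo:  # flat patch: avoid division by zero
        return np.zeros(v.shape, dtype=np.float32)
    clipped = np.clip(v, lo, hi)
    return ((clipped - lo) / (hi - lo)).astype(np.float32)

def iter_patches(volume, patch=(32, 32, 32)):
    """Yield (origin, sub-volume) pairs that tile a 3D volume without overlap.

    Border regions that do not fill a whole patch are skipped in this sketch.
    """
    dz, dy, dx = patch
    size_z, size_y, size_x = volume.shape
    for z in range(0, size_z - dz + 1, dz):
        for y in range(0, size_y - dy + 1, dy):
            for x in range(0, size_x - dx + 1, dx):
                yield (z, y, x), volume[z:z + dz, y:y + dy, x:x + dx]

# Synthetic stand-in for a (much larger) microscopy volume.
rng = np.random.default_rng(0)
volume = rng.integers(0, 4096, size=(64, 96, 128)).astype(np.uint16)

patches = [(origin, normalise(p)) for origin, p in iter_patches(volume)]
print(len(patches), patches[0][1].shape, patches[0][1].dtype)
```

Working patch by patch keeps memory use bounded, which matters when a single image can reach the sizes mentioned above. In practice the volume would be read lazily from disk rather than held in memory as it is here.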

We evaluated five key models:

- U-Net: A classic segmentation model widely used in biomedical imaging.

- BTUNet: A self-supervised learning version of U-Net utilising Barlow Twins.

- YOLOv8x-seg: A real-time segmentation model optimised for speed.

- YOLOv8x+SAM: A hybrid model incorporating the Segment Anything Model (SAM).

- HoverNet: A powerful dual-task model designed for histopathology.
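For readers unfamiliar with Barlow Twins, the self-supervised objective mentioned for BTUNet, the following is a minimal NumPy sketch of its loss. It is not the BTUNet implementation, which the interview does not detail. The function name `barlow_twins_loss`, the weighting `lam` and the random embeddings are assumptions for illustration. The idea is that two augmented views of the same input should produce embeddings whose cross-correlation matrix is close to the identity.

```python
import numpy as np

def barlow_twins_loss(z_a, z_b, lam=5e-3, eps=1e-9):
    """Barlow Twins objective for two batches of embeddings, shape (batch, dim).

    lam weights the redundancy-reduction term; the value here is illustrative.
    """
    n = z_a.shape[0]
    # Standardise every embedding dimension across the batch.
    z_a = (z_a - z_a.mean(axis=0)) / (z_a.std(axis=0) + eps)
    z_b = (z_b - z_b.mean(axis=0)) / (z_b.std(axis=0) + eps)
    # Cross-correlation matrix between the two views, shape (dim, dim).
    c = z_a.T @ z_b / n
    diag = np.diag(c)
    on_diag = np.sum((1.0 - diag) ** 2)           # invariance: diagonal -> 1
    off_diag = np.sum(c ** 2) - np.sum(diag ** 2)  # redundancy: off-diagonal -> 0
    return float(on_diag + lam * off_diag)

rng = np.random.default_rng(0)
z = rng.normal(size=(256, 8))
loss_same = barlow_twins_loss(z, z)                            # two identical views
loss_indep = barlow_twins_loss(z, rng.normal(size=(256, 8)))   # unrelated views
print(loss_same < loss_indep)
```

Because the loss needs only pairs of augmented views and no labels, an encoder can be pre-trained on unlabelled images and then fine-tuned for segmentation with a smaller annotated set.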

Results and Findings

How can your insights help our society?

Our research provides valuable insights that can significantly enhance cancer detection and diagnosis. Among the models we evaluated, HoverNet demonstrated the highest segmentation accuracy, making it the most reliable choice for precise cancer detection. BTUNet excelled in handling limited labelled data, proving the effectiveness of self-supervised learning while delivering more stable prediction results. Meanwhile, YOLOv8x-seg stood out for its speed, making it a strong candidate for real-time applications, though with a slight trade-off in segmentation accuracy.
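The interview does not state which metric was used to measure segmentation accuracy. Two measures that are widely used for this purpose are the Dice coefficient and intersection over union (IoU), so the sketch below assumes them purely for illustration. The function name `dice_and_iou` and the toy masks are hypothetical.

```python
import numpy as np

def dice_and_iou(pred, target):
    """Return (Dice, IoU) for two binary masks of the same shape."""
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)
    intersection = np.logical_and(pred, target).sum()
    union = np.logical_or(pred, target).sum()
    total = pred.sum() + target.sum()
    # Two empty masks agree perfectly, so both scores are defined as 1.
    dice = 2.0 * intersection / total if total else 1.0
    iou = intersection / union if union else 1.0
    return float(dice), float(iou)

# Toy 3D masks: the prediction covers 8 voxels, the reference covers 12.
pred = np.zeros((4, 4, 4), dtype=bool)
target = np.zeros((4, 4, 4), dtype=bool)
pred[1:3, 1:3, 1:3] = True
target[1:3, 1:3, 1:4] = True

dice, iou = dice_and_iou(pred, target)
print(round(dice, 3), round(iou, 3))  # 0.8 0.667
```

Both scores range from 0 (no overlap) to 1 (perfect overlap). Dice weights the overlap more generously than IoU, which is why the same prediction scores 0.8 on one and about 0.67 on the other.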

Early cancer detection saves lives. By improving segmentation accuracy, enabling better auto-labelling, and reducing reliance on manual annotation, our research contributes to:

- Increased diagnostic precision, minimising the risk of false negatives.

- Automation of tedious tasks, allowing pathologists to focus on more complex cases.

- Enhanced accessibility, making advanced diagnostics feasible even in low-resource settings.

What are your goals for your project in future?

As I look ahead, I see several key directions in which to develop this project further. One major focus is to make the model more robust by training on a more diverse dataset to ensure better generalisation across different tissue types and conditions. Additionally, optimising model architectures for real-time deployment will help reduce processing times, making AI-assisted diagnostics faster and more efficient. Another exciting approach is integrating multi-modal imaging data, for example by combining MRI with histopathology to provide a more comprehensive analysis of cancerous tissues. Ultimately, the goal is to apply AI-assisted diagnostic tools in real-world clinical settings, bridging the gap between research and practical medical applications and thereby improving patient care.

How did your studies in the Applied Information and Data Science programme influence the project?

My background in engineering, AI and deep learning provided the technical foundation for this research. Additionally, my studies at HSLU helped me develop a more structured problem-solving approach, which was crucial when working with large-scale medical datasets and conducting deep-learning experiments. More importantly, though, the invaluable support from my supervisor, Dr Umberto Michelucci, pointed me in the right direction and played a key role in shaping this project.

What advice would you give to others starting on similar projects?

Understanding the domain is crucial – working closely with medical experts ensures that AI models align with real-world needs and address practical challenges. At the same time, data quality is just as important as the model architecture. After all, preparing data from scratch can be as demanding as extracting oil from an offshore field, requiring significant effort and precision. Experimentation and iteration play a key role in improving performance, making it essential to try different models, loss functions, and augmentation techniques while also learning from existing research and best practices in the field. Lastly, patience is vital, as data-driven projects in med-tech are often time-consuming due to complex data structures and ethical considerations. But persistence and careful refinement will ultimately help you make meaningful progress.

And finally: What new hashtag are you aiming for in future?

#CollectiveLearning: I truly believe that progress in AI isn’t an individual pursuit – it’s a collective journey. By sharing research, collaborating across disciplines and keeping an open mind, we can learn from each other and move forward together. AI has the power to transform our world, but only if we build it transparently, inclusively and ethically. I want to be part of a future where knowledge is shared rather than hoarded, and where AI improves everyone’s lives. Only by working hand in hand can we create an AI-powered world that benefits everyone.

We want to thank Morteza Kiani Haftlang for his dedication and for sharing these valuable insights.
